Killer apps, literally: Wearable and smartphone tech on the battlefield

Google Glass, smartphone tech could give soldiers an augmented view of the world.

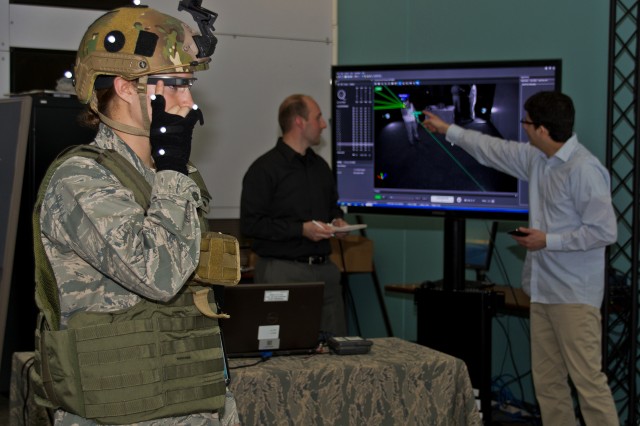

Staff Sgt. Vincent Kelly, of A Company, 1st Battalion, 29th Infantry Regiment, receives real-time imagery using the Small Unit Leader Situational Awareness Tool during a Maneuver Battle Lab demonstration on May 13, 2014, at the McKenna Military Operations on Urban Terrain training area at Fort Benning, Georgia.

The US military has been experimenting with heads-up data displays for years, but now the services are looking to cheaper alternatives to custom-built systems in the consumer electronics realm. In the not-too-distant future, when an Army squad leader wants to know what’s up ahead, he or she may reach for a smartphone or a Google Glass device instead of binoculars.

The US Army’s Maneuver Battle Lab at Fort Benning, Georgia, recently demonstrated one example of this adoption of consumer mobile and wearable tech. The Army is evaluating off-the-shelf smartphone technology as a handheld “situational awareness tool,” with an app that connects to video feeds from robots on the ground and in the air.

Drones for eyes

The Small Unit Leader Situational Awareness Tool (SULSAT), an experiment of the Army’s Concepts Experimentation Program, got its first field demonstration at Fort Benning’s McKenna Military Operations on Urban Terrain training area on May 13. Using a wireless connection to a tactical robot’s or a small drone’s control system, SULSAT pulls video to squad or platoon commanders wherever they are, so they no longer have to look over the operator’s shoulder or pass requests for instructions over a radio.

The $1 million SULSAT program, according to Army research and development budget documents, is part of an effort to better integrate robotics systems at the lowest level within the Army’s command structure, giving sergeants a pocketable tool that shows them what’s over the hill or around the corner. It can be used to clear routes for a squad or platoon, to identify potential improvised explosive devices, or just to get a handle on unfamiliar surroundings.

The main drawback of the current prototype is the limited range of its wireless connection. First Lieutenant Brandon Slusher, an officer with A Company, 1st Battalion, 29th Infantry Regiment, praised the tool, but told the Army News Service that the platoon commander still has to stay close to the tactical robotic control operator to get the video feeds.

Other goals of the program, beyond the live video feeds, include 3D virtual reality views built from air and ground sensors. In March, the Army posted a request for a system that can take in LiDAR and other sensor data from ground robots and drones to build a visualization of terrain, internal and external structures of buildings, and of potential threats—including automatic recognition of moving targets. This data would be pushed out to soldiers on their handheld devices.

Ok, Glass—bombs away

A test of the Google Glass-based BATMAN II at the Air Force Research Lab (AFRL) at Wright-Patterson Air Force Base.

Wright Patterson Air Force Base

The logo of the Air Force's BATMAN program.

Wright Patterson Air Force Base

Google Glass is also being picked up by DOD contractors for other potential uses. At the Special Operations Forces Industry Conference on May 20 in Tampa, Florida, the Battelle Memorial Institute (which operates the National Biodefense Analysis and Countermeasures Center and a number of other national labs) demonstrated Google Glass as part of a chemical-biological-radiological detection suite. The Glass apps, called Tactical Augmented Reality Applications (TARA), use video recognition and a wireless interface with chemical, biological, and radiological/nuclear sensors to give soldiers and other first responders a form of augmented reality: a heads-up display that presents information based on video analysis and sensor data.

In an interview with Ars, Darren Wolfe, of Battelle, said that the wireless interface to Glass could allow someone testing an area for potential hazards to quickly assess whether hazardous agents are present. “Instead of having to look at a readout, it gives them a simple color indicator to tell them if the instrument tests positive for an agent,” he said. “It will flash up red on the Glass if there’s a hazard.” This allows the person on scene to continue to focus on the task of containing or disposing of contaminated objects.

Another feature of TARA, useful both on the battlefield and in possible hazardous materials incidents, is its video recognition capability. The video from the Glass camera can be used with facial recognition software to alert soldiers or law enforcement officers when a person of interest is spotted, and to identify improvised explosive devices, hazardous chemical placards, and equipment potentially used for the construction of weapons of mass destruction. The Glass display can then provide a checklist of how to deal with the IED, or other items, based on visual analysis.

TARA can be used in combination with another application built with consumer-targeted hardware—the Oculus Rift virtual reality headset—as part of the Battelle Immersive Training Environment (BITE). Using Oculus’ built-in head sensors and a pair of handheld sensors, a user can interact with virtual objects in HAZMAT, IED, and WMD training scenarios and get simulated data from the Google Glass display.
